What is GenAI? Generative AI explained | corporate Blogger bd

Generative Artificial Intelligence, often shortened to Generative AI or GenAI, represents a groundbreaking frontier in the field of artificial intelligence. Unlike traditional AI systems that primarily analyze, classify, or predict based on existing data, Generative AI models are designed to create new, original content. This content can range from text, images, and audio to video, code, and even 3D models.

Think of it this way:

Traditional AI (Discriminative AI):

This type of AI learns to distinguish between different types of data. For example, a traditional AI might look at a picture and tell you whether it contains a cat or a dog; it discriminates between categories. Other typical tasks include classifying emails as spam or not spam and choosing an answer from a predefined set of options.

Generative AI:

This AI doesn't just recognize; it generates. Given a prompt, it can produce a brand-new picture of a cat, write a poem about a dog, compose a piece of music, or even create a video from a text description. It learns the underlying patterns and structures of its training data to generate novel outputs that resemble, but are not identical to, the data it was trained on.
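To make the contrast concrete, here is a minimal Python sketch on a one-dimensional toy problem. The weight values, the midpoint threshold, and the single-Gaussian model are all illustrative assumptions, far simpler than any real system: the discriminative part can only return a label for an input it is given, while the generative part learns the distribution of the data and samples new values from it.

```python
import random
import statistics

# Hypothetical toy data: body weights in kg (illustrative, not real measurements).
cat_weights = [3.6, 4.1, 4.5, 3.9, 5.0, 4.3]
dog_weights = [12.0, 25.5, 8.2, 30.1, 18.4, 22.0]

# Discriminative approach: learn a boundary between the classes.
# Here the "model" is simply the midpoint between the two class means.
threshold = (statistics.mean(cat_weights) + statistics.mean(dog_weights)) / 2


def classify(weight_kg: float) -> str:
    """Label an input; this model has no way to produce new data."""
    return "cat" if weight_kg < threshold else "dog"


# Generative approach: model the data distribution itself (one Gaussian
# fitted to the cat weights) and sample new values from it.
cat_mu = statistics.mean(cat_weights)
cat_sigma = statistics.stdev(cat_weights)


def generate_cat_weight(rng: random.Random) -> float:
    """Draw a new, plausible cat weight that need not appear in the data."""
    return rng.gauss(cat_mu, cat_sigma)


rng = random.Random(0)
print(classify(4.2))  # → cat
print([round(generate_cat_weight(rng), 2) for _ in range(3)])
```

Each call to `generate_cat_weight` yields a value that is plausible under the fitted distribution but generally not one of the six training values, which is the essence of "resemble, but are not identical to".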

How Does Generative AI Work?

At its core, Generative AI operates using complex machine learning models, most notably neural networks, which are loosely inspired by the structure and function of the human brain. The process typically involves:

Training on Vast Datasets:

Generative AI models are trained on immense amounts of existing data. For text models (like Large Language Models, or LLMs), this means ingesting trillions of words from books, articles, websites, and conversations. For image models, it means millions or even billions of images paired with text descriptions. This allows the AI to learn the statistical relationships, patterns, styles, and underlying "rules" of the data.

Pattern Recognition and Understanding:

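During training, the neural network identifies intricate patterns within the data. For text, it learns grammar, syntax, semantics, and context. For images, it learns shapes, colors, textures, and compositional elements. None of these rules are programmed by hand: they emerge as the network repeatedly adjusts its internal parameters (weights) to reduce the error in its predictions.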
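As a drastically simplified stand-in for this learning step, the sketch below "trains" a bigram model by counting which word follows which in a tiny made-up corpus and turning the counts into probabilities. Real LLMs learn far richer patterns by adjusting the weights of a neural network rather than by counting; the corpus and the function name `train_bigram_model` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus: list[str]) -> dict[str, dict[str, float]]:
    """Estimate P(next_word | word) by counting adjacent word pairs."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1

    # Normalize each word's follower counts into a probability distribution.
    model = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: c / total for nxt, c in followers.items()}
    return model


# Hypothetical three-sentence training corpus.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigram_model(corpus)
print(model["the"])
print(model["sat"])
```

From this corpus the model learns that "the" is followed by "cat" or "dog" twice as often as by "mat" or "rug", and that "sat" is always followed by "on": tiny examples of statistical relationships extracted from data.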

Generating New Content:

When given a "prompt" (an instruction or input from a user), the Generative AI uses its learned understanding to produce new content that aligns with the prompt's specifications and the patterns it has observed. It essentially predicts what the most plausible or desired output would be based on its training.

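For text, it predicts a probability distribution over the next token (a word or part of a word), picks a token from that distribution, appends it, and repeats, building the response one token at a time.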

For images, it might start with random noise and iteratively refine it into a coherent image based on the input prompt.
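The text case can be sketched as a single next-token step in Python. The vocabulary and the raw scores (logits) are hypothetical values standing in for a trained model's output; softmax-with-temperature sampling is one common decoding strategy, not the only one (greedy decoding, top-k, and nucleus sampling are alternatives).

```python
import math
import random


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; temperature < 1 sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab: list[str], logits: list[float],
                      temperature: float, rng: random.Random) -> str:
    """Sample one token in proportion to its softmax probability."""
    probs = softmax(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical scores a model might assign to candidate next words
# after the prompt "The cat sat on the".
vocab = ["mat", "sofa", "roof", "banana"]
logits = [3.0, 2.0, 1.0, -2.0]

rng = random.Random(42)
print([round(p, 3) for p in softmax(logits)])  # → [0.662, 0.244, 0.09, 0.004]
print([sample_next_token(vocab, logits, 0.7, rng) for _ in range(5)])
```

A generator repeats this step, appending each chosen token to the prompt and asking the model for fresh scores. Lower temperatures concentrate probability on the top-scoring word ("mat" here); higher temperatures let less likely words such as "roof" appear more often.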

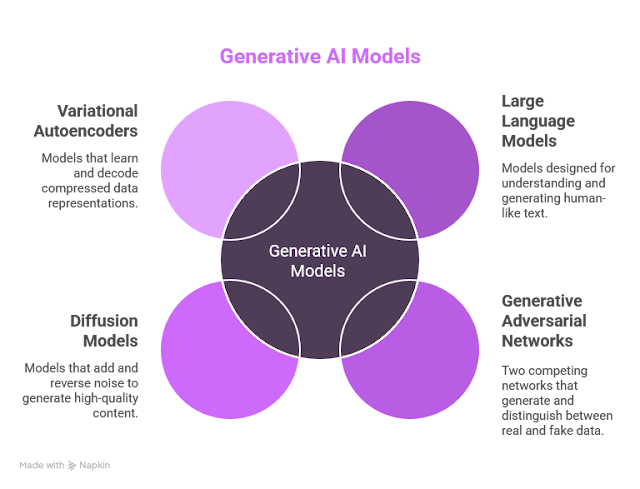

Key Types of Generative AI Models:

Several architectural approaches drive Generative AI:

Large Language Models (LLMs):

These are specifically designed for understanding and generating human-like text. Most are built on the Transformer architecture, whose self-attention mechanism lets the model weigh how relevant every word in a passage is to every other word, which makes it well suited to sequential data like language. Examples include OpenAI's GPT series (the models behind ChatGPT), Google's Gemini, and Meta's LLaMA.
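The core operation of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V, in which each token's query is compared with every token's key to decide how much of each token's value to mix into its output. The dependency-free Python sketch below shows that computation. As a simplifying assumption it uses the input vectors directly as queries, keys, and values, whereas a real Transformer derives them with learned projections and runs many attention heads in parallel. The optional causal mask, which stops a token from attending to later tokens, is the variant used by GPT-style models that generate text left to right.

```python
import math


def softmax(row: list[float]) -> list[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m: list[list[float]]) -> list[list[float]]:
    return [list(col) for col in zip(*m)]


def scaled_dot_product_attention(q, k, v, causal=False):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors.

    With causal=True, position i may only attend to positions 0..i.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:
            # Masked positions get -inf, so their softmax weight becomes 0.
            scaled = [s if j <= i else -math.inf for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, v), weights


# Three hypothetical token embeddings of size 2. For simplicity they serve
# directly as queries, keys, and values (real models use learned projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, attn = scaled_dot_product_attention(x, x, x, causal=True)
for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` sums to 1 and says how strongly one token draws on the others; with the causal mask the first token can only attend to itself.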

Generative Adversarial Networks (GANs):

GANs consist of two competing neural networks: a "generator" that creates new data (e.g., fake images) and a "discriminator" that tries to distinguish between real data and the generator's fake data. Through this adversarial process, both networks improve, resulting in increasingly realistic generated content. GANs became well known for generating highly realistic synthetic faces.
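In the original GAN formulation, the two networks play a minimax game over the value function E[log D(x)] + E[log(1 − D(G(z)))], where D outputs the probability that its input is real and G maps random noise z to a sample. The Python sketch below only computes the corresponding losses from a batch of discriminator outputs; the gradient updates would come from a deep learning framework and are not shown. The generator loss uses the common "non-saturating" form, −log D(G(z)), which is a choice made here rather than something the text above specifies, and all probabilities are made-up values.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float],
                       eps: float = 1e-12) -> float:
    """Binary cross-entropy: D is rewarded for D(x) -> 1 on real data
    and D(G(z)) -> 0 on generated data."""
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float], eps: float = 1e-12) -> float:
    """Non-saturating generator loss: G is rewarded for D(G(z)) -> 1."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability "real") for one batch.
d_fake_early = [0.1, 0.2]  # early training: fakes are easy to spot
d_fake_later = [0.6, 0.7]  # later: fakes fool the discriminator more often

print(round(generator_loss(d_fake_early), 3))  # → 1.956
print(round(generator_loss(d_fake_later), 3))  # → 0.434
print(round(discriminator_loss([0.5], [0.5]), 3))  # → 1.386
```

As the generator improves, its loss falls. At the theoretical equilibrium the discriminator outputs 0.5 for everything, giving a discriminator loss of 2 ln 2 ≈ 1.386.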

Diffusion Models:

These models work by gradually adding random noise to training data until it becomes pure noise, and then learning to reverse this process. By starting with random noise and gradually "denoising" it, they can generate high-quality, diverse content. DALL-E 2, DALL-E 3, and Stable Diffusion are prominent examples of image generation models that use this approach.
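The forward (noising) half of this process has a convenient closed form: after t steps, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, where ε is standard Gaussian noise and ᾱ_t is the running product of (1 − β) over a noise schedule β. The Python sketch below assumes a linear schedule from 1e-4 to 0.02 over 1,000 steps (the one used in the original DDPM work) and a hypothetical three-value "image"; it shows only the noising side. The learned part of a diffusion model is a network trained to predict ε from x_t, which is then applied step by step to run the process in reverse.

```python
import math
import random


def linear_beta_schedule(num_steps: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances beta_t, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar_t = product of (1 - beta) over steps 0..t. The clean signal
    is scaled by sqrt(alpha_bar_t), so it fades as noise accumulates."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float],
              rng: random.Random) -> tuple[list[float], list[float]]:
    """Jump straight to step t: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps  # a denoising network is trained to predict eps from xt


alpha_bars = cumulative_alphas(linear_beta_schedule())
x0 = [1.0, -1.0, 0.5]  # hypothetical tiny "image" of three pixel values
rng = random.Random(0)
for t in (0, 250, 999):
    xt, _ = add_noise(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 5), [round(v, 2) for v in xt])
```

At step 0 the output is almost identical to `x0`; by the last step ᾱ is tiny and the output is essentially pure Gaussian noise, which is the starting point for generation.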

Variational Autoencoders (VAEs):

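VAEs use an encoder to compress data into a lower-dimensional representation (a "latent space") and a decoder to reconstruct data from it. Because training keeps the latent space close to a simple probability distribution, sampling a new point from that distribution and decoding it produces new data that resembles, but varies from, the original data.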
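Two ideas make VAEs trainable and generative: the reparameterization trick, which samples a latent vector as z = μ + σ·ε so that gradients can flow through μ and σ, and a KL-divergence penalty that keeps the encoder's distributions close to a standard normal prior. The Python sketch below shows both, plus generation by sampling from the prior. The encoder outputs are made-up numbers, and `toy_decoder` is a hypothetical fixed linear map standing in for a trained decoder network.

```python
import math
import random


def reparameterize(mu: list[float], log_var: list[float],
                   rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1). Writing the sample this
    way lets gradients flow through mu and sigma during training."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu: list[float], log_var: list[float]) -> float:
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


def toy_decoder(z: list[float]) -> list[float]:
    """Hypothetical stand-in for a trained decoder: a fixed linear map 2 -> 3."""
    weights = [[1.0, 0.5], [-0.3, 2.0], [0.8, -1.0]]
    return [sum(w * zi for w, zi in zip(row, z)) for row in weights]


rng = random.Random(0)

# Training-time view: an encoder (not shown) would output mu and log_var
# for one input; these numbers are made up for illustration.
mu, log_var = [0.5, -1.0], [-0.2, 0.1]
z = reparameterize(mu, log_var, rng)
reconstruction = toy_decoder(z)  # compared with the input by a reconstruction loss (not shown)
print(round(kl_to_standard_normal(mu, log_var), 3))  # → 0.637

# Generation: sample z from the prior N(0, I) and decode it into new data.
new_point = toy_decoder([rng.gauss(0.0, 1.0) for _ in range(2)])
print([round(v, 2) for v in new_point])
```

The KL term is zero only when μ = 0 and σ = 1, so it pulls every encoding toward the prior; that is what makes randomly sampled latent points decode into sensible new data.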

Why is Generative AI a Big Deal?

Generative AI is considered transformative for several reasons:

Creativity and Innovation:

It can help people overcome creative blocks, brainstorm novel ideas, and produce diverse outputs that spark human creativity.

Automation of Creative Tasks:

It automates tasks that were previously thought to be uniquely human domains, such as drafting articles, designing visuals, or composing music.

Personalization at Scale:

It enables the creation of highly personalized content (e.g., marketing copy, educational materials) for individual users or specific segments.

Efficiency and Speed:

It dramatically reduces the time and effort required to generate content, boosting productivity across various industries.

Democratization of Creation:

Powerful creative tools are becoming accessible to individuals and small businesses, leveling the playing field with larger enterprises.

Examples of Generative AI in Action

Text Generation:

Writing emails, blog posts, marketing copy, summaries, scripts, poetry, and code, and answering complex questions in a conversational style (e.g., ChatGPT, Gemini, formerly Bard).

Image Creation:

Generating photorealistic images from text descriptions, creating illustrations, logos, concept art, and manipulating existing images (e.g., Midjourney, DALL-E, Stable Diffusion).

Audio Generation:

Composing original music, generating realistic voiceovers, and creating sound effects (e.g., Google DeepMind's Lyria, OpenAI's MuseNet).

Video Generation:

Creating short video clips from text prompts, animating still images, and applying stylistic transformations to video (e.g., RunwayML, OpenAI's Sora).

Code Generation:

Writing code snippets, debugging, translating between programming languages, and assisting developers in building applications (e.g., GitHub Copilot).

Generative AI is rapidly evolving and is set to reshape industries from marketing and education to software development and entertainment, promising a future where human creativity is augmented and amplified by intelligent machines.